As a reminder, the Google Sandbox effect is observed when a site is indexed by Google, but it does not rank well or at all for keywords it should rank for. The site: command returns pages of your website, proving it is indexed and not banned, but its pages do not appear in search results.

Google denies there is a sandbox where it would park some sites, but it acknowledges there is something in its algorithms which produces a sandbox effect for sites considered spam. My site would qualify as spam since it was keyword-stuffed.

I had registered my site URL in Google Webmaster Tools (GWT) and noticed little to no activity. No indexing of pages and keywords. Fetch as Google would not help. I saw a spike in Crawl Stats for a couple of days, then it fell flat. The site would get no queries, yet the site: command returned its main page.

So, I cleared my site of everything considered spam. I used the following online tools to find my mistakes:

- http://tool.motoricerca.info/spam-detector/

- http://www.seoworkers.com/tools/report.html

- http://www.google.com/safebrowsing/diagnostic?site=<your-site-url-here>

- http://try.powermapper.com/demo/sortsite.aspx

I used Fetch as Google in GWT again, but it did not help get the site out of the Sandbox effect. I read all the posts I could find on the net about this topic. Basically, everyone recommends using white hat SEO techniques (more quality content, quality backlinks, etc.) in order to increase the likelihood Google bots will crawl your site again: "It could take months before you get out of the sandbox...!!!"

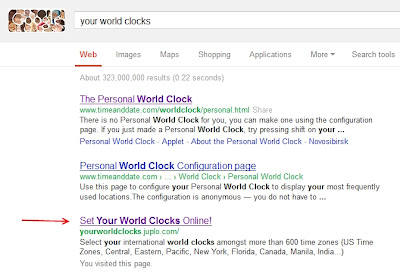

Not true. I have found a way which showed some results in less than a week. My site is now ranked at the 3rd search result position for its niche keyword. I can see my 'carefully selected' keywords in GWT's Content Keywords page.

So, here is the procedure:

- The first step is indeed to clear any spam and bad SEO practices from your site. It is a prerequisite. The following does not work if you don't perform this step with integrity.

- Next, make sure your site has a sitemap.xml and a robots.txt file. Make sure the sitemap is complete enough (i.e., it lists all your site's pages or at least the most important ones).

- Then, register your sitemap.xml in your robots.txt by adding a `Sitemap:` line with the sitemap's full URL. You can submit your sitemap.xml to GWT, but it is not mandatory.

- Use Fetch as Google to pull your robots.txt in GWT and submit the URL. This makes sure your robots.txt is reachable by Google. It avoids losing time.

- Make sure there is a <lastmod> tag for each page in your sitemap, and make sure you update it to a recent date when you have updated a page. This is especially important if your page contained spam! Keep updating this tag each time you modify a page.

- If you don't cheat with the <lastmod> tag, I have noticed Google responds well to it.

- Wait for about a week to see unfolding results.

- It is as simple as that. No need for expensive SEO consulting!
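To make the sitemap and robots.txt steps above concrete, here is a minimal sketch in Python. It is not the tooling I used, only an illustration: the `example.com` URLs, the dates, and the helper names `build_sitemap` and `robots_txt` are all placeholders I made up. It builds a sitemap where every page carries a `<lastmod>` date, and a robots.txt that points to that sitemap.

```python
from datetime import date
from xml.etree import ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # <lastmod> should be the real date of the last change to the page.
        ET.SubElement(entry, "lastmod").text = modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")


def robots_txt(sitemap_url):
    """Return a robots.txt body that allows everything and declares the sitemap."""
    return "User-agent: *\nDisallow:\n\nSitemap: " + sitemap_url + "\n"


# Placeholder pages and dates, for illustration only.
pages = [
    ("https://www.example.com/", date(2013, 5, 1)),
    ("https://www.example.com/about.html", date(2013, 5, 3)),
]

sitemap_xml = build_sitemap(pages)
robots = robots_txt("https://www.example.com/sitemap.xml")

print(sitemap_xml)
print(robots)
```

In practice you would write the two strings to `sitemap.xml` and `robots.txt` at the root of your site, and regenerate the sitemap with an honest date each time a page changes.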

I suspect this method works better than everything suggested so far, because Google bots crawl robots.txt frequently. The sitemap is revisited more often, and therefore Google knows sooner that pages have been updated. Hence, it re-crawls them faster, which increases the likelihood of proper re-indexing. No need to wait months for bots to come back. It eliminates the chicken-and-egg issue.
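If you want to double-check that a crawler reading your robots.txt can actually find the sitemap, here is a small sketch using Python's standard `urllib.robotparser` (the `site_maps()` method needs Python 3.8 or later). The robots.txt content and the helper name `declared_sitemaps` are made up for the example, and this only shows how a generic parser reads the file. It says nothing about what Google does internally.

```python
from urllib.robotparser import RobotFileParser


def declared_sitemaps(robots_txt_body):
    """Return the sitemap URLs declared in a robots.txt body (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt_body.splitlines())
    # site_maps() returns None when no Sitemap: line is present.
    return parser.site_maps() or []


# Placeholder robots.txt content, for illustration only.
sample = """User-agent: *
Disallow:

Sitemap: https://www.example.com/sitemap.xml
"""

found = declared_sitemaps(sample)
print(found)
```

An empty list means the `Sitemap:` line is missing or misspelled, which is worth fixing before waiting a week for results.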

I don't think this method would work for sites which got penalized because someone bought fake backlinks or traffic. I think shaking off those bad links and that traffic would be a prerequisite too. If this can't be achieved, some have suggested using a new URL. I have never tried this, because I never bought links or traffic, but I reckon it would be part of the solution.

Why did I keyword-stuff my site in the first place? Because I was frustrated by GWT, which would not show relevant indexing data fast enough for new sites, even when clean SEO was applied. Moreover, GWT does not tell you when a site falls into the sandbox effect. Google gives you the silent treatment. This is a recipe for disaster when it comes to creating a trusting and educated relationship with publishers.

Not everyone is an evil hacker!